You’ve been there: going back and forth with your AI coding agent, and what you’re prompting just isn’t getting through.

Great, now I have to google the answer…

It makes sense, too. Humans are very good at explaining needs, giving context, and building the sort of meta-cognitive, shared understanding of a problem space when talking to each other. But when the other end of the line isn’t a person but a statistical next-token machine (read: an LLM), those specifics are sometimes harder to convey.

My solution, /eval

I’ve been using Amp Code for some time, and I’ve now built an /eval prompt I can drop at the end of a thread once we finally get the problem fixed. Here it is in its entirety.

# /eval - Evaluate Prompting Quality

Provides honest, critical feedback on prompting style and effectiveness.

## Evaluation Criteria

- **Clarity**: Were requests clear and unambiguous?

- **Specificity**: Did requests have enough detail (measurements, scope, context)?

- **Efficiency**: Was anything redundant or could be stated more concisely?

- **Context**: Were visual references, testing context, or screenshots provided?

- **Scope**: Were distinctions (mobile vs desktop, device types) stated explicitly?

## Response Format

Assessment includes:

1. Strengths - what worked well

2. Weaknesses - areas for improvement

3. Actionable improvements - specific recommendations

4. Minimal viable prompt - the most concise single request that would have accomplished the task in one exchange

No flattery. Direct, honest assessment only.

It started as a way for me to better understand how I’m prompting, but I quickly realized I could just ask for the MVP, the Minimum Viable Prompt that could have gotten what I wanted in one shot.

Here’s a recent example.

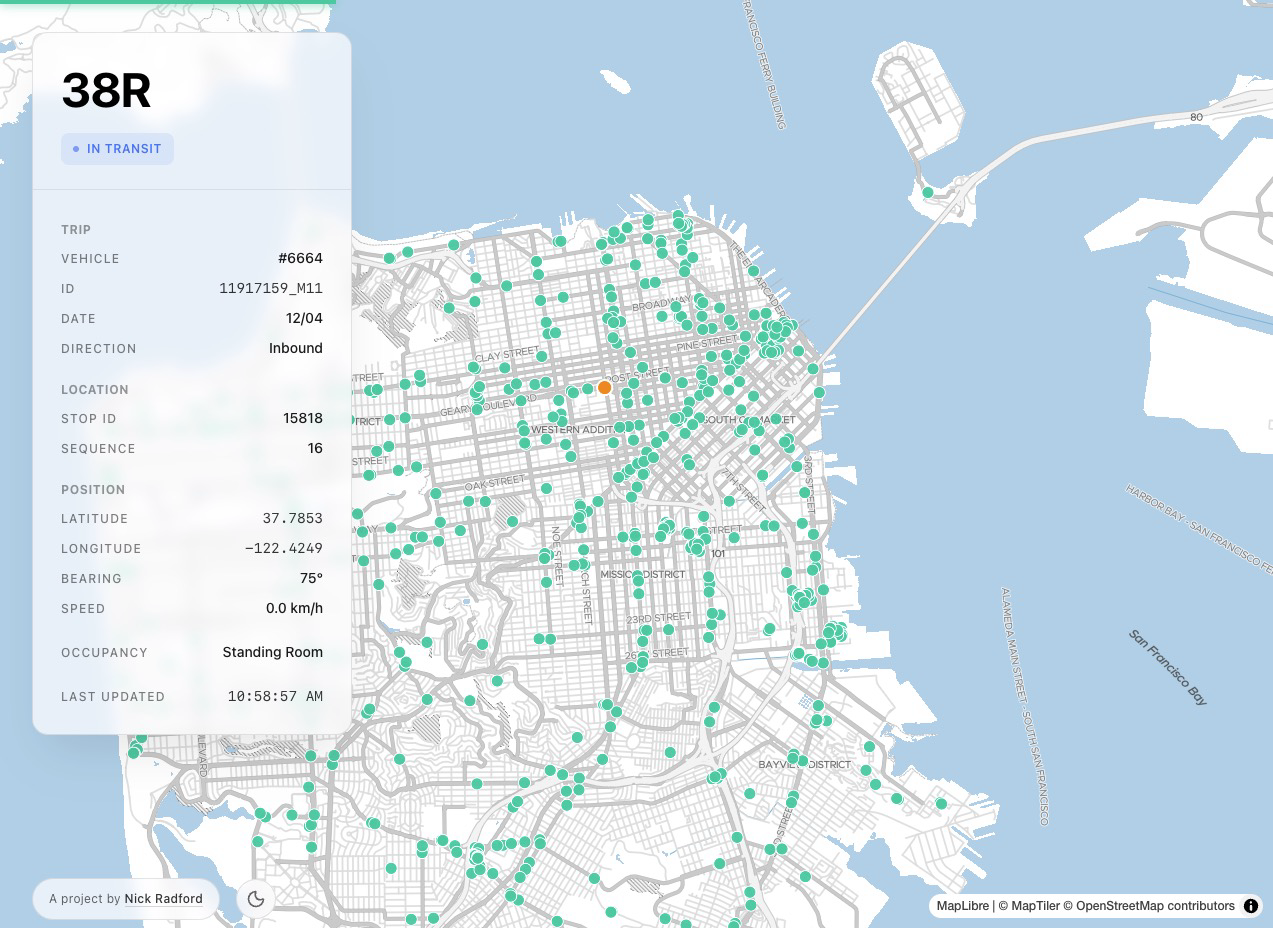

For my Creative Coding Intensive, I’m building an art piece that uses transit data. To help me visualize that data on a map, I built this.

It’s definitely overkill for what I need, but I was having fun building the backend out and wanted a nice frontend as well.

Then it needed to work well on my phone, of course, so I opened up Amp and got this VehicleDetailsPanel rendering as a slide-up bottom sheet. Commit and push, open the live site on my desktop, and now the VehicleDetailsPanel is sliding up and to the right on desktop, instead of just to the right.

Several exchanges with Amp later, we got it fixed. I had to look up the tailwindcss-animate docs to learn that you can use md:slide-in-from-bottom-0 to remove that animation at medium and higher breakpoints.
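For anyone who hasn’t hit this before, here’s a minimal sketch of what that fix can look like. This is not the actual code from my component: the helper name is hypothetical, and the only class taken from the real fix is md:slide-in-from-bottom-0. The other classes are standard tailwindcss-animate utilities that I’m assuming for illustration.

```typescript
// Hypothetical helper, not the real VehicleDetailsPanel code.
// Builds the enter-animation classes for a panel that is a bottom sheet
// on mobile and a right-hand sidebar on md and up.
function panelEnterClasses(): string {
  return [
    "animate-in",                // tailwindcss-animate: enable the enter animation
    "slide-in-from-bottom",      // mobile: slide up from the bottom edge
    "md:slide-in-from-bottom-0", // md and up: reset the vertical slide to zero
    "md:slide-in-from-right",    // md and up: slide in from the right instead
  ].join(" ");
}

// Usage in JSX would look something like:
// <aside className={panelEnterClasses()}>...</aside>
```

Without the md:slide-in-from-bottom-0 reset, the bottom slide still applies at every breakpoint, which is how you end up sliding up and to the right at the same time.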

I ran my /eval prompt, and here’s what Amp had to say:

Evaluation: VehicleSidebar Animation Requirements

Strengths

- Provided the actual file content upfront, eliminating guesswork

- Clarified requirements iteratively when initial approach was incorrect

- Third message included the specific Tailwind pattern needed, demonstrating understanding of the solution

Weaknesses

- First request was ambiguous: “should only slide up and slide down on mobile, not on md: or higher breakpoints” didn’t specify what should happen on desktop (fade? slide left? nothing?)

- Required two follow-up exchanges to communicate a requirement that could have been stated once

- No visual context (screenshot or description) of the desired behavior

Actionable Improvements

- Lead with complete requirements: State what happens at each breakpoint, not just what shouldn’t happen.

- Specify breakpoint behavior explicitly: “On mobile, slide up/down. On desktop (md+), slide left/right.”

- Reduce assumption gaps: Mention technical solutions (like Tailwind patterns) if you know them, rather than guiding toward discovery.

Minimal Viable Prompt

“Fix the sidebar animations in VehicleSidebar.tsx so it slides up/down on mobile and slides left/right on desktop (md:). You need to override/disable the bottom slide animations at the md: breakpoint.”

The word “override” or “disable” signals that animation resets are needed, directing the search toward Tailwind’s -0 suffix pattern without requiring explicit mention.

I stashed my changes, opened up a new thread, and tried the MVP. It worked in one shot.

Conclusion

I think this pattern of reflection after a coding session with an agent does a few things.

- It helps you think like a PM. Being able to articulate requirements better helps not only with agents, but with the real-life flesh people you work with.

- It can help teach you new concepts. If you don’t know what a specific pattern is called, the agent can help identify it and build your vocabulary.

- It reduces costs in the long run. Agents run on tokens, and the fewer tokens you use (read: less back and forth), the lower your costs will be.
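To put a rough shape on that last point, here’s a back-of-the-envelope sketch. It assumes the whole thread gets sent as input on every exchange, with no caching, and that every message is the same size. The function name and the 500-token message size are made up for illustration, so treat the numbers as a shape, not a bill.

```typescript
// Rough estimate of input tokens sent across a thread.
// Assumptions: full history is resent each exchange, no caching,
// and every prompt and reply is tokensPerMessage long.
function totalInputTokens(exchanges: number, tokensPerMessage: number): number {
  let history = 0; // tokens already in the thread
  let total = 0;   // input tokens sent so far
  for (let i = 0; i < exchanges; i++) {
    history += tokensPerMessage; // the new prompt joins the thread
    total += history;            // the whole thread goes in as input
    history += tokensPerMessage; // the agent's reply joins the thread
  }
  return total;
}

console.log(totalInputTokens(1, 500)); // 500
console.log(totalInputTokens(3, 500)); // 4500
```

Under those assumptions, three exchanges send nine times the input of a single one-shot prompt, because each follow-up drags the whole thread along with it.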

Give my /eval prompt a go, and let me know on Twitter how you like it, or of any improvements that could be made.